The Metropolitan Police’s arrest of over 500 individuals on Saturday 9th August under the Terrorism Act 2000 was seen by some as heavy-handed. There was significant pushback from organisations across the UK, most notably the Equality and Human Rights Commission (EHRC), which warned that disproportionate police action undermines confidence in the UK’s human rights protections.

In a similar vein, the EHRC will be permitted to intervene in an upcoming judicial review examining the Met Police’s use of Live Facial Recognition Technology (LFRT). Its criticism of the Met’s continued use of the system echoes the Court of Appeal’s findings in R (Bridges) v Chief Constable of South Wales Police. In that case, South Wales Police’s use of LFRT at public events was challenged and found to be unlawful. However, what the court deemed unlawful was the deployment of LFRT without appropriate safeguards, including safeguards against discrimination, and not the technology itself.

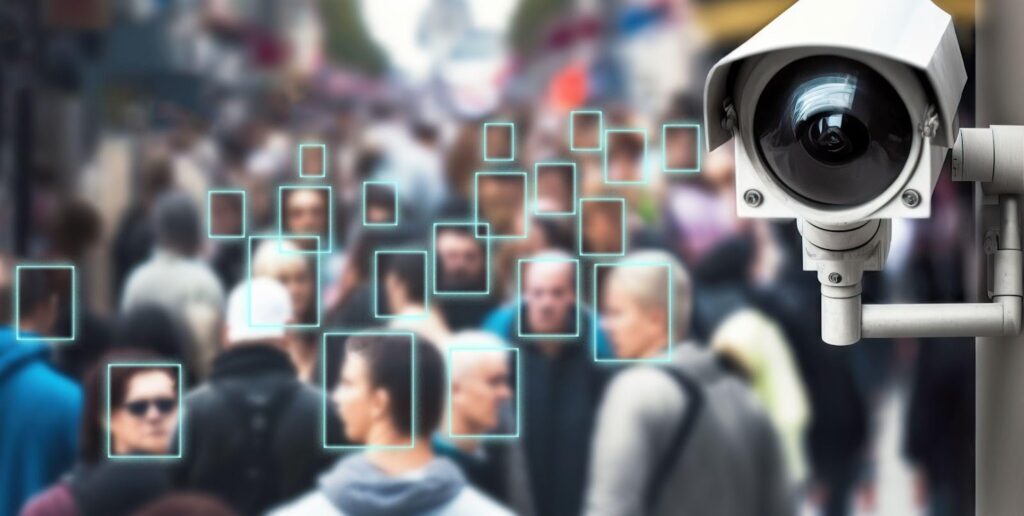

What is Live Facial Recognition Technology and why is it controversial?

LFRT is a system that scans the faces of people in public spaces and compares them against a watchlist of individuals. The technology can, in theory, help identify suspects quickly. However, in practice, it raises serious concerns about accuracy, proportionality, and bias.

Despite falling afoul of the Data Protection Act 2018 in Bridges, police forces have continued to operate LFRT systems. In 2024, over 4.7 million faces were scanned, double the figure for 2023. The expansion suggests that, rather than curbing their use of the technology after Bridges, police have treated the ruling as partial validation and continue to cite it as justification for broader rollouts.

Who is Shaun Thompson and why is his case important?

At the heart of the new judicial review is Shaun Thompson, a volunteer who was mistakenly flagged by the Met Police’s LFRT system outside London Bridge station as he returned from a volunteering shift. Officers held him for 30 minutes, demanded scans of his fingerprints and threatened to arrest him, even though he showed ID proving that he was not the individual identified by the cameras.

This was not an isolated mishap. Data shows that black men are disproportionately flagged by LFRT systems, a troubling pattern that undermines public trust and highlights structural bias in algorithmic policing. Thompson’s case is significant not only for what happened to him, but also for how it illustrates systemic flaws in the technology’s deployment. His challenge opened the door for the EHRC to intervene, framing the review as a question not merely of policing efficiency but of fundamental human rights.

How big is the risk of false reports?

Despite the staggering potential scale of false identifications, the police have continued to rely on the system. The factor with the greatest effect on the number of false reports is the size of the watchlist. According to the Metropolitan Police’s own Data Protection Impact Assessment (DPIA), watchlists can contain up to 10,000 individuals’ biometric profiles. With an error rate of 1 in 6,000, even lists of this size risk producing false alerts.

If watchlists were expanded in line with the police’s increasing use of the system, say to cover the population of London at around 9 million people, the same 1 in 6,000 error rate would produce roughly 1,500 false results. Each one of these would represent a real person wrongly stopped, questioned, or even detained.
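The 1,500 figure comes from a simple multiplication of population size by error rate. The short Python sketch below reproduces that back-of-envelope estimate for both watchlist sizes mentioned above. It assumes, as the estimate itself does, that every person counted has the same, independent 1 in 6,000 chance of producing a false result; real-world false alert rates also depend on match thresholds, the number of faces scanned and image conditions. The function name `expected_false_results` is purely illustrative.

```python
from fractions import Fraction


def expected_false_results(population: int, error_rate: Fraction) -> Fraction:
    """Back-of-envelope estimate: population size multiplied by the error rate.

    Simplifying assumption (illustrative only): every person counted has the
    same, independent chance of producing a false result.
    """
    return population * error_rate


# "1 in 6,000", as quoted above; Fraction keeps the arithmetic exact.
ERROR_RATE = Fraction(1, 6000)

for label, size in [("Current maximum watchlist", 10_000),
                    ("Population of London (approx.)", 9_000_000)]:
    estimate = expected_false_results(size, ERROR_RATE)
    print(f"{label}: {size:,} people -> about {float(estimate):,.1f} false results")
```

Run as written, this prints about 1.7 false results for a 10,000-person watchlist and about 1,500.0 for a London-sized one, showing how the expected number of errors grows in direct proportion to the size of the pool.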

Beyond the numbers lies the human impact: the anxiety, humiliation, and erosion of trust that comes from being falsely flagged. When these mistakes disproportionately affect minority groups, the problem shifts from being a technical glitch to a civil rights issue.

How does the EU’s AI Act differ from the UK’s approach?

The EHRC rightly points out that under the EU’s AI Act, such a use of this technology would be deemed high-risk. In fact, the Act goes further: it bans law enforcement’s use of real-time remote biometric identification in publicly accessible spaces, save for narrow exemptions tied to sixteen specific serious crimes. Even in those limited cases, the deployment of LFRT must be authorised by either a judicial authority or an independent administrative body.

The UK, by comparison, operates with looser oversight. There is no equivalent high-risk categorisation or outright ban. Instead, use of the system is left largely to the discretion of police forces, with minimal intervention from regulators. This makes the EHRC’s intervention in the upcoming judicial review all the more important, as it introduces a level of scrutiny otherwise missing from the UK landscape.

Where is the ICO in all of this?

Unlike the EHRC, which is participating in the judicial review, the Information Commissioner’s Office (ICO) has contributed little of practical value. As the regulator responsible for enforcing the Data Protection Act 2018, the ICO has the legal mandate to oversee how biometric data is used. Yet its interventions on LFRT have been limited to broad statements that appropriate safeguards must be in place, a point already made by the Court of Appeal in 2020.

The ICO’s absence from the judicial review is somewhat surprising. For a regulator whose role is to ensure compliance with data protection law, a lack of visible involvement in what could become a pivotal assessment of AI’s role in the UK’s public sector risks diminishing public confidence in its authority.

What could this judicial review mean for the future of AI in policing?

When the case is heard in January 2026, it will do more than decide whether Shaun Thompson was wrongfully stopped: it will set the tone for how AI-driven technologies are used by police and other public bodies in the UK. Given the EHRC’s vocal criticism of LFRT since its introduction, its involvement may be preferable to relying on a regulator that prefers a quiet audit in South Wales to the fiery exchange of a London courtroom. If the court agrees with the EHRC’s concerns, we may see stricter limits placed on LFRT, closer alignment with European standards, and a stronger emphasis on human rights protections.

For now, the EHRC’s intervention offers a glimpse of what robust oversight could look like: one that does not simply accept technological inevitability but demands that innovation serves the public fairly and lawfully. Whether this approach will prevail is unknown, but the stakes could not be higher. The outcome will help define the relationship between AI, policing, and human rights for years to come.